What's Ap?

Introduction

This post is aimed at the Scala programmer who is familiar with the basics of

the language and ideally becoming interested, or even deeply entrenched, in

pure functional programming and curious about Applicative Functors. We'll

delve into the original paper in which they were introduced, converting the

Haskell code to modern Scala, both custom code and using Cats, Cats Effect and

Zio. Finally, we'll look at an efficient implementation of blending two images

using Applicative programming.

Functor and Monad

As a Scala programmer, you will be no doubt be familiar with the map and

flatMap functions, which you will find in some of the collections and other

data types in the standard Scala library. If you're interested in pure

functional programming, and have used the Cats or Scalaz libraries, you may know

that these two functions are part of the Functor and Monad type classes

respectively.

Before looking at Apply and Applicative let's review what you can do

Functors and Monads.

Remember, the goal of functional programming is to do most of our work using

pure functions. We use structures like Functors and Monads to manage effects

(things that are not pure); letting us use pure functions in an effectful world.

The Functor type class gives us the power to take a pure function like a => a +

1 and apply it to the value inside an effect. Here are a couple of examples of

using Functor's map on two data types…

List(1,2,3).map(a => a + 1) // res: List[Int] = List(2, 3, 4) Option(1).map(a => a + 1) // res: Option[Int] = Some(2)

Functor gives you the ability to reach inside an effect, apply a pure

function to the value inside there, and wrap it up inside an effect of the same

type. The type signature of map is map[A,B](fa: F[A], f: A => B): F[B].

Monad instances have two functions. The first, pure or unit, gives us a way

to lift pure values into an effectful context. pure[A](a: A) F[A] and you can

think of it as being a type constructor…

import cats._ import cats.implicits._ 1.pure[List] // res: List[Int] = List(1) 1.pure[Option] // res: Option[Int] = Some(1)

Pure isn't really giving us something we didn't already have; we could make a list and an option before. But the pure function is useful as a building block when building code that uses Monad instances. We'll see it in use later.

Finally, Monad has the flatMap function. The signature is flatMap[A,B](fa:

F[A], f: A => [B]): F[B]. flatMap comes in handy when we compose two effects

together. It let's you get the result from the first and pass it as a (pure) parameter

to the next. For example, imagine we have two calls that go out over the network

to a database or external service and we use map to chain them together…

def getUser(email: String): Future[User] = ??? def getAccountStatus(id: String): Future[AccountStatus] = ??? val accountStatus = getUser("bob@google.com") .map(user => getAccountStatus(user.accountId)) // accountStatus has type Future[Future[AccountStatus]]

Dealing with nested effects like Future[Future[_]] creates a burden on us to

reach inside the layers one at a time. If we used flatMap instead it would take

care of flattening the result for us…

val accountStatus = getUser("bob@google.com") .flatMap(user => getAccountStatus(user.accountId)) // accountStatus has type Future[AccountStatus]

That is the essence of Monads; being able to compose effects together. Note

that the second call is dependent on the first. It would make no sense to call

getAccountStatus before we called getUser because we need the user's account

ID. In fact, even if these two effects were completely independent, we would

still have to wait for the first one before calling the second. That's not an

ideal situation because these calls may take tens or even a few hundred

milliseconds. If we want the service to be low latency, we would like to run

these calls concurrently instead of in sequence.

What's Ap?

Now we're caught up Functors and Monads, let's look at the Applicative

typeclass. It is defined as follows in Cats, with some details removed…

trait Applicative[F[_]] extends Apply[F] { def pure[A](x: A): F[A] }

If you make an instance of Applicative then you need to supply an implementation

of pure which is exactly the same as pure found in Monads. You also need to

implement Apply which looks like this…

trait Apply[F[_]] extends Functor[F] { def ap[A, B](ff: F[A => B])(fa: F[A]): F[B] }

You can see that Apply extends Functor which means it has map. Also it has the

function ap which is, of course, the main subject of this post. What a curious

type signature! Just like with map we are dealing with an effect type F, and

a parameter F[A]. The difference is the function we want to apply (ff: F[A

=> B]) is also inside the effect.

Before talking about what this is useful for, let's look at what it actually does for various implementations.

Applicative instance for Option

Option((a:Int) => a + 10).ap(Option(20)) // res: Option[Int] = Some(30) Option((a:Int) => a + 10).ap(None) // res: Option[Int] = None Option.empty[Int => Int].ap(Option(20)) // res: Option[Int] = None Option.empty[Int => Int].ap(Option.empty[Int]) // res: Option[Int] = None

The ap function for Option, then, behaves probably as you'd expect. When you

apply the function, if the ff argument is None then there's nothing to

apply and we get the result None. If there is a function in there, we extract

it so that we have a pure function that we can apply to the effectful

argument F[A]. Again, if that is empty we get None, otherwise, we get the

value f(a) which will be wrapped back up in the effect giving Some(30).

Applicative instance for List

List((a:Int) => a + 1, (a:Int) => a - 10, (a:Int) => a + 22).ap(List(1,2,3)) // res: List[Int] = List(2, 3, 4, -9, -8, -7, 23, 24, 25)

For List the input value for ff has the signature List[A => B], and each

function in the list is applied to each argument in the input list.

Idomaticly apply all the things

All data types that have instances of Applicative have a way to apply a

function wrapped in an effect of that type, and the way that it is applied is

idomatic to that effect. In fact, before the name Applicative Functor stuck,

they were called Idioms.

You may be scratching your head at this point, for it's not often in programming

that you want to apply a list of functions to a list (although I'm sure you can

probably come up with some ways to use it), and how often do you have functions

in Options? It gets weirder in the case of other data types. With Future, or

IO, for example, do you ever remember writing a function that returns a

function from a Future? It's certainly rare. Still more strange would be a

function inside a State monad, but that is perfectly valid too…

import cats.data.State // Create a function in a State val fs = State[Int, (Int => Int)] (s => (s, (a: Int) => a + s)) // Now to apply it to an appropriate State val applied = fs.ap(State[Int, Int](s => (s,10))) // Finally run the Applicative State and grab the value applied.run(10).value //res: (Int, Int) = (10, 20)

I don't think you can get the answer to "What's Ap" just by looking at type signatures and examples. To really understand applicative style in depth I thought I would walk through the seminal paper on the subject and get it from the originators…

Applicative Programming with Effects

Let's Apply ourselves

Applicative Functors first saw the light of day in the 2008 paper by McBride and Patterson, "Applicative Programming with Effects" which you can find here…

https://www.staff.city.ac.uk/~ross/papers/Applicative.pdf

The paper begins with three motivating examples for the use of Applicative style…

This is the story of a pattern that popped up time and again in our daily work, programming in Haskell (Peyton Jones, 2003), until the temptation to abstract it became irresistible. Let us illustrate with some examples.

We'll walk through each of these examples and convert them to use Scala…

Sequencing Commands

One often wants to execute a sequence of commands and collect the sequence of their responses, and indeed there is such a function in the Haskell Prelude (here specialised to IO)

sequence :: [IO a ] → IO [a ] sequence [ ] = return [ ] sequence (c : cs) = do x ← c xs ← sequence cs

Before we get started, if you're following along in your Scala IDE or REPL you will need some imports listed below. You can also clone the Github repository.

import zio._ import zio.console._ import zio.clock._ import zio.duration._ import cats.Applicative import cats.implicits._

… and the following libraries …

libraryDependencies ++= Seq( "org.typelevel" %% "cats-core" % "2.1.1", "dev.zio" %% "zio" % "1.0.0-RC18")

I am using ZIO in place of Haskell's IO Monad, and bringing in Cats to use its Applicative.

Converting the sequence function from Haskell to Scala…

def monadicSequence[Z,E,A](ios: List[ZIO[Z, E, A]]): ZIO[Z, E, List[A]] = { ios match { case Nil => zioApplicative.pure(List.empty[A]) case c :: cs => for ( x <- c; xs <- monadicSequence(cs) ) yield (x +: xs) } }

If you're not familiar with ZIO you can think of it as a replacement for the standard library

Scala Future, but it has better performance and a lot more features. It is

also not eagerly evaluated like Future. To explain, when you create a future it runs

immediately and you cannot run it again. You can create a ZIO and run it when

you decide to and as many times as you want.

To demonstrate this sequence running let's write an implementation of a silly

algorithm called Sleep Sort. Sleep Sort works by waiting an amount of time based

on the value of the number. Emitting the numbers in this way sorts them

(assuming your scheduler is accurate enough). Let's be clear, this is a stupid

way to sort numbers, but it's handy as a way to illustrate our monadicSequence

function.

def delayedPrintNumber(s: Int): ZIO[Console with Clock,String,Int] = { putStrLn(s"Preparing to say number in $s seconds") *> putStrLn(s"$s").delay(s.seconds) *> ZIO.succeed(s) } val ios1 = List(6,5,2,1,3,8,4,7).map(delayedPrintNumber) // ios1: List[ZIO[Console with Clock,String,Int]]

The function creates an IO effect, which when run will immediately print a

message and then wait s seconds before printing the number. We map the

function across a list of numbers to generate a list of IO effects, which we can

then run.

You may be surprised that this does not work. Instead of running all the effects at once and printing them out in order it just executes the first IO (wait 6 seconds), then the second (wait 5 seconds).

Monadic version Preparing to say number in 6 seconds 6 Preparing to say number in 5 seconds 5 // ... and so on for a while

If you were not surprised maybe you're ahead of me, and know that our

monadicSequence function cannot possibly run all the effects at once by virtue

of it being monadic in the first place.

That for comprehension is really hiding that we are calling flatMap on each

successive IO, and flatMap sequences things together. You must wait for the

result of the first effect before you can evaluate the second. So whilst the

first implementation of sequence in the paper will absolutely work, it will

not let us implement our sleep sort, nor let us parallelize the IO's in general.

Back to the paper, at this point the authors observe…

In the (c : cs) case, we collect the values of some effectful computations, which we then use as the arguments to a pure function (:). We could avoid the need for names to wire these values through to their point of usage if we had a kind of ‘effectful application’.

By effectful application they are talking about the ap function, and they go

on to say that it lives in the Haskell Monad library. Given that function they

rewrite the sequence function as follows…

sequence :: [IO a ] → IO [a ] sequence [ ] = return [ ] sequence (c : cs) = return (:) ‘ap‘ c ‘ap‘ sequence cs

Except for the noise of the returns and aps, this definition is in a fairly standard applicative style, even though effects are present.

Note that the ap they are using here is in the Monad library, and implemented

using flatMap, so it will not yet allow our sleep sort to work. However, I've

implemented an Applicative instance for ZIO which does not have that

limitation…

implicit def zioApplicative[Z,E] = new Applicative[ZIO[Z,E,?]] { def pure[A](x: A) = ZIO.succeed(x) def ap[A, B](ff: ZIO[Z,E,A => B])(fa: ZIO[Z,E,A]) = { map2(ff, fa){ (f,a) => f(a) } } override def map2[A, B, C](fa: ZIO[Z,E,A], fb: ZIO[Z,E,B])(f: (A, B) => C) : ZIO[Z,E,C] = { fa.zipPar(fb).map{case (a,b) => f(a,b)} } }

It's not important to understand all the details here, all you need understand

is we now have an ap that we can apply to ZIO effects that is truly parallel,

so if you're not interested then skip to the next paragraph.

The pure function is straightforward, it just wraps a pure value in a

succeeded ZIO. The ap function is more interesting. Whilst it's not obvious

how you would implement ap in for ZIO, it is really easy to implement map2.

map2 comes in handy because it lets you take the results of two effects and

pass them to a pure function. The function has the signature f: (A, B) => C.

We use the ZIO function zipPar to execute the two effects in parallel, and

if both fa and fb yield values then they are mapped with the pure function

giving us a ZIO with the final result inside. Happily, you can implement ap in

terms of map2, so that solves our problem.

Here's the conversion of the applicative version of sequence to Scala…

def applicativeSequence[Z,E,A](ios: List[ZIO[Z, E, A]]): ZIO[Z, E, List[A]] = { ios match { case Nil => ZIO.succeed(List.empty[A]) case c :: cs => val ff: ZIO[Z,E, A => (List[A] => List[A])] = zioApplicative.pure(((a: A) => (listA: List[A]) => a +: listA)) val p1 = ff.ap(c) p1.ap(applicativeSequence(cs)) } }

It's a little bit noisier than the Haskell code, but most of that is having to be more verbose about the types to keep the type checker happy. In fact the parts of each implementation match up together.

Now we can run that and you will see that the effects are now parellelised and our sleep sort works!

Applicative version Preparing to say number in 6 seconds Preparing to say number in 2 seconds Preparing to say number in 1 seconds Preparing to say number in 3 seconds Preparing to say number in 8 seconds Preparing to say number in 4 seconds Preparing to say number in 7 seconds Preparing to say number in 5 seconds 1 2 3 4 5 6 7 8

Note that the point the authors were making here was just to show that the

sequence function is a pattern that came up often, that could be more

succinctly expressed with ap. Showing that it also enables our effects to run

in parallel, given the correct implementation, was just to show one of the

benefits of avoiding Monad when effects are not dependent on each other.

Matrix Transposition

The second example in the paper is that of Matrix transposition, which takes a matrix and flips it along a diagonal. For example…

Original matrix 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Transposed matrix 1 6 11 2 7 12 3 8 13 4 9 14 5 10 15

In Haskell, we first see this implememtation of transpose…

transpose :: [[a ]] → [[a ]] transpose [ ] = repeat [ ] transpose (xs : xss) = zipWith (:) xs (transpose xss) repeat :: a → [a ] repeat x = x : repeat x

Let's translate this to Scala. The algorithm works by taking each row in turn and zipping it with each subsequent row.

First, we need to be careful about the function repeat which returns an

infinite number of whatever x is. This is used in the transpose for the last row

of the matrix where we want a number of empty lists to finish our recursion but

we don't know how many, so we want to just keep taking them. Since Haskell is by

default lazily evaluated this will work fine. In Scala as soon as we evaluate

repeat we will run into an infinite loop. That's easily fixed by switching to

LazyList which is part of the standard library. (Before Scala 2.13 it was

called Stream).

def repeat[A](a: A): LazyList[A] = a #:: repeat(a)

The function zipWith has the following type signature…

zipWith :: (a -> b -> c) -> [a] -> [b] -> [c]

In other words, it takes two lists and a pure function of two arguments, and creates a new list by applying the function to each element. It will stop once it runs out of elements in one of the lists. Here's the Scala version.

def zipWith[A, B, C](as: LazyList[A], bs: LazyList[B])( f: (A, B) => C): LazyList[C] = { as.zip(bs).map { case (a, b) => f(a, b) } }

With the pieces in place I can now implement the transpose as follows…

def transpose[A](matrix: LazyList[LazyList[A]]): LazyList[LazyList[A]] = { matrix match { case LazyList() => repeat(LazyList.empty) case xs #:: xss => zipWith(xs, transpose(xss)) { case (a, as) => a +: as } } }

The next step in the paper is to make this look a bit more applicative by

using a combination of repeat and zapp…

zapp :: [a → b ] → [a ] → [b ] zapp (f : fs) (x : xs) = f x : zapp fs xs zapp = [ ] transpose :: [[a ]] → [[a ]] transpose [ ] = repeat [ ] transpose (xs : xss) = repeat (:) ‘zapp‘ xs ‘zapp‘ transpose xss

Except for the noise of the repeats and zapps, this definition is in a fairly standard applicative style, even though we are working with vectors.

Evaluating Expressions

The third example of applicative style is an expression evaluator that can add numbers, both literals and numbers bound to strings and stored in an environment.

When implementing an evaluator for a language of expressions, it is customary to pass around an environment, giving values to the free variables.

The Haskell code looks like this…

data Exp v = Var v | Val Int | Add (Exp v) (Exp v) eval :: Exp v → Env v → Int eval (Var x ) γ = fetch x γ eval (Val i) γ = i eval (Add p q) γ = eval p γ + eval q γ

Converting to Scala is straightforward…

sealed trait Exp case class Val(value: Int) extends Exp case class Add(left: Exp, right: Exp) extends Exp case class Var(key: String) extends Exp case class Env[K](kv: Map[K,Int]) def fetch(key: String)(env: Env[String]) : Int = env.kv.getOrElse(key, 0) def eval(exp: Exp, env: Env[String]) : Int = { exp match { case Val(value) => value case Var(key) => fetch(key)(env) case Add(left, right) => eval(left, env) + eval(right, env) } }

Here I've made the environment a simple key value store, and, to avoid complicating the example with error handling, if a variable is not present in the environment I just default to returning zero.

Following the pattern of the previous two examples, the authors then pull some magic to make the applicative pattern more noticeable…

We can eliminate the clutter of the explicitly threaded environment with a little help from some very old friends, designed for this purpose

eval :: Exp v → Env v → Int eval (Var x ) = fetch x eval (Val i) = K i eval (Add p q) = K (+) ‘S‘ eval p ‘S‘ eval q where K :: a → env → a K x γ = x S :: (env → a → b) → (env → a) → (env → b) S ef es γ = (ef γ) (es γ)

So this all looks a bit cryptic. Who are the old friends? Well, if you look at

the type signature of K it is actually the pure function, and S

is the ap function. This is in fact what we'd call the Reader Monad in

Scala.

By old friends, the authors are referring to the SKI Combinator Calculus.

Let's reimplement in Scala using the Reader.

def fetchR(key: String) = Reader[Map[String,Int], Int](env => env.getOrElse(key, 0)) def pureR(value: Int) = Reader[Map[String,Int], Int](env => value) def evalR(exp: Exp): Reader[Map[String,Int], Int] = { exp match { case Val(value) => pureR(value) case Var(key) => fetchR(key) case Add(left, right) => val f = Reader((env:Map[String,Int]) => (a:Int) => (b:Int) => a + b) val leftEval = evalR(left).ap(f) evalR(right).ap(leftEval) } }

And take it for a test drive…

val env1 = Env(Map("x" -> 3, "y" -> 10)) val exp1 = Add(Val(10), Add(Var("x"), Var("y"))) println(s"Eval : ${eval(exp1, env1)}") // Eval : 23

The Applicative Type class

To summarize, we've seen three different effects used in applicative style; IO

(or ZIO), List and Reader. Now you can see why it makes sense to be able to

apply a function that is wrapped in these effects. What we needed, and got with

ap, is a way to lift a pure function so we can apply it to a chain of effects

of the same effect type.

Next in the paper, the authors describe the laws which an instance of the Applicative type class must adhere to, which is out of scope for this post but is put succinctly in English as follows…

The idea is that pure embeds pure computations into the pure fragment of an effectful world—the resulting computations may thus be shunted around freely, as long as the order of the genuinely effectful computations is preserved.

For more detail on the applicative laws check out chapter 12, section 5 of The Red Book

Applicatives are all Functors (hence the name Applicative Functors), because you can implement the map operation as follows…

// Declare map in terms of pure and ap def map[A,B,F[_]: Applicative](fa: F[A], f: A => B): F[B] = { Applicative[F].pure(f).ap(fa) } // Map a function over a list map(List(1,2,3,4,5), (a:Int) => a + 1) // res: List[Int] = List(2, 3, 4, 5, 6)

Note that you don't have to do this with Cats instances because all Applicatives have their Functor instance taken care of too.

The paper then notes that all uses of Applicatives follow this pattern of lifting a pure function and applying it to a chain of effects, and suggests a new syntax for shifting into the Idiom of the applicative functor. The syntax is a special pair of brackets…

[[ ff f1 f2 f3 ... fn ]]

Although this has not been widely adopted in either Haskell or Scala as far as I can tell, you can try it yourself using this delightfully named (and implemented) Scala library: Idiom Ears. This will let you closely match the syntax from the paper; for example…

val f = (a: Int) => (b: Int) => a * b ⊏| (f) (List(1, 2)) (List(3, 4)) |⊐ // List(3, 4, 6, 8) // Which is equivalent to Applicative[List].pure(f).ap(List(1,2)).ap(List(3,4))

If you do fall in love with the idiom brackets of McBride and Patterson then knock yourself out, but you may have to invest some time bringing the project back to life as it has suffered some bitrot since 2016. There is a demo of IdiomEars in the Github repository accompanying this post, but I simply copied the code into my project rather than spend time updating it.

Moving right along to Traverse

Have you noticed that sequence and transpose now look rather alike? The details that distinguish the two programs are inferred by the compiler from their types. Both are instances of the applicative distributor for lists.

At this point in the paper we have seen the birth of the Applicative type class

which encapsulates the ap and pure functions needed to implement the

patterns above. Next, the authors describe another new type class, Traverse,

which lets us generalize the pattern further…

dist :: Applicative f ⇒ [f a ] → f [a ] dist [ ] = ⊏| [ ] |⊐ dist (v : vs) = ⊏| (:) v (dist vs) |⊐

Note that I'm using the unicode from Idiom Ears to replace the fancy brackets from the paper which I cannot reproduce here, but you get the idea. Let's rewrite in Scala…

// applicative distributor for lists def dist[A, F[_]](fs: List[F[A]])(implicit app: Applicative[F]): F[List[A]] = { fs match { case Nil => app.pure(List.empty[A]) case c :: cs => val w1 = app.pure((a: A) => (listA: List[A]) => a +: listA) val w2 = w1.ap(c) w2.ap(dist(cs)) } } // dist a list of options println(dist(List(Option(10), Option(10), Option(3), Option(4)))) // Some(List(10, 10, 3, 4)) // Note that we have short circuiting println(dist(List(None, Option(10), Option(3), Option(4)))) // None

Note that this short-circuits. We fail as soon as a single None shows up. Why?

It's because although applicative allows us to avoid the enforced sequencing of

Monad's flatMap, many types have instances of ap implemented in terms of

flatMap anyway, because that matches the expectation of users for that type.

We could override the Cats instance for Option with our own. What we do instead

is create Applicative versions of type classes. For example, our monadic friend

Either (which represents an error or a success value) has an applicative

alter-ego Validated. Rather than short-circuit on failure, Validated allows us

to accumulate errors so we can provide valuable feeback ot the caller. That is one

of the super-powers of Applicatives!

val someValidateds: List[Validated[NonEmptyList[String],Int]] = (List("Had some error".invalidNel, 10.valid, "Another error".invalidNel, 4.valid)) // Try the same with Validated that has an Applicative instance println("Validated failure example: " + dist(someValidateds)) // Validated failure example: Invalid(NonEmptyList(Had some error, Another error))

Just by changing data types we have completely changed the behaviour from

short-circuiting to being able to accumulate the errors. Just imagine that these

are expensive computations or slow network calls, and you can see how avoiding

sequencing can really save us in computing costs, and thereby save us money.

Furthermore, we can improve user experience. We can validate a whole form from

the user at once and send all the corrections needed rather than necessitate a

painful back and forth until the whole form is valid. Now get back to dist.

Distribution is often used together with ‘map’.

Fair enough. The dist function we developed above would be enhanced in

usefulness if it could map a list of pure values into some effect type first.

Let's take a look at a poor way to implement that…

flakyMap :: (a → Maybe b) → [a ] → Maybe [b ] flakyMap f ss = dist (fmap f ss)

We can translate pretty much directly to Scala…

def flakyMap[A,B](f: A => Option[B], as: List[A]): Option[List[B]] = { dist(as.map(f)) } println("Flakymap success: " + flakyMap((n: Int) => Option(n * 2), List(1,2,3))) // Flakymap success: Some(List(2, 4, 6)) println("Flakymap failure: " + flakyMap((n: Int) => if(n%2==1) Some(n) else None, List(1,2,3))) // Flakymap failure: None

That's clearly useful, and it works, but it's flaky because we have to process

the list twice. First we map over the list to transform it, then we do it again

with the dist function. How about we do both at once? That's Traverse…

traverse :: Applicative f ⇒ (a → f b) → [a ] → f [b ] traverse f [ ] = ⊏| [ ] |⊐ traverse f (x : xs) = ⊏| (:) (f x ) (traverse f xs) |⊐

And a Scala version…

def listTraverse[A, B, F[_]](f: A => F[B], fs: List[A]) (implicit app: Applicative[F]): F[List[B]] = { fs match { case Nil => app.pure(List.empty[B]) case c :: cs => val w1 = app.pure((b: B) => (listB: List[B]) => b +: listB) val w2 = w1.ap(f(c)) w2.ap(listTraverse(f, cs)) } } // Output is the same as flakyMap

By providing the identity function for f we get the sequence function back

in terms of traverse…

def sequence[A, F[_]](fs: List[F[A]]) (implicit app: Applicative[F]): F[List[A]] = { listTraverse((fa: F[A]) => fa, fs) }

Finally, we get to the Traverse type class, which gives us an interface to write traverse for two effect types rather than just List and another effect. We have two functions, traverse and dist, which are represented in Scala today as traverse and sequence.

class Traversable t where traverse :: Applicative f ⇒ (a → f b) → t a → f (t b) dist :: Applicative f ⇒ t (f a) → f (t a) dist = traverse id

There's no need to show the Scala because we can rely on the implementations in the Cats library, but the instance implementations for list are as above. In the paper we see that you can also traverse more complex structures such as a tree…

sealed trait Tree[+A] case object Leaf extends Tree[Nothing] case class Node[A](left: Tree[A], a: A, right: Tree[A]) extends Tree[A] def treeTraverse[A, B, F[_]](f: A => F[B], fs: Tree[A]) (implicit app: Applicative[F]): F[Tree[B]] = { fs match { case Leaf => app.pure(Leaf) case Node(left, a, right) => val w1 = app.pure((l: Tree[B]) => (v: B) => (r: Tree[B]) => Node(l,v,r)) val w2 = w1.ap(treeTraverse(f,left)) val w3 = w2.ap(f(a)) w3.ap(treeTraverse(f,right)) } } val tree1 = Node(Leaf, 10, Node(Leaf, 5, Node(Leaf, 10, Leaf))) println("treeTraverse: " + treeTraverse((n: Int) => Option(n + 1), tree1)) // treeTraverse: Some(Node(Leaf,11,Node(Leaf,6,Node(Leaf,11,Leaf))))

Note that in your own code you would usually lean on the Traverse type class and override some methods to provide your own implementations.

Another thing to highlight the expressive power of traverse is that we can use

it to do a map just like a ~Functor by using the Id (identity) Monad as our

effect type. The Id monad simply wraps a pure value and has no other effect, so

we can use it to use traverse as a functor as follows…

@ List[Int](1,2,3).traverse((a: Int) => (1 + a): Id[Int]) // Id[List[Int]] = List(2, 3, 4)

Monoids are phantom Applicative functors

This section of the paper, part four if you are reading along, has an intriguing title. Whilst short, there is a lot of information in a small space on how we can use Monoids, Applicatives and Traverse to do some cool things. I will go much slower than the paper as some of the concepts take some time to get your head around.

Monoids are a type class that provides an interface to join things together such as appending strings or adding numbers. In addition, they give us a way to represent a zero value for the data type, which will be useful in a moment. If you want to dig into Monoids in more detail I have written a couple of posts on the subject…

- [[http://justinhj.github.io/2019/06/10/monoids-for-production.html][Monoids for Production [2019]]]

- [[https://medium.com/yoppworks-reactive-systems/persistent-entities-with-monoids-a44212a157fb][Persistent Entities with Monoids [2020]]]

Every Applicative is a Monoid

It's possible to implement a Monoid instance that works for any Applicative. In Scala it looks like this…

implicit def appMonoid[A: Monoid, F[_]: Applicative] = new Monoid[F[A]] { def empty: F[A] = Applicative[F].pure((Monoid[A].empty)) def combine(x: F[A], y: F[A]): F[A] = Applicative[F].map2(x,y)(Monoid[A].combine) }

What does this give us? We can join Applicative Effects togther, and when we do

so they are joined in the idiom of the effect type. So for example when

combining a list with its default Monoid instance it will simply append the

lists like this…

List(1,2,3) |+| List(4,5,6) // res: List[Int] = List(1, 2, 3, 4, 5, 6)

But if instead we bring into scope a monoid for List we get the applicative application instead…

implicit val m = appMonoid[Int, List] List(1,2,3) |+| List(4,5,6) // res: List[Int] = List(5, 6, 7, 6, 7, 8, 7, 8, 9)

Magical Folding with Traverse

It does not work the other way around, but some types with Monoid instances can

use those instances in their Applicative implementation. For example Tuple2 in

the Cats library does just that. The actual implementation is split into two

because of the way the Cats class hierarchy is organized, so here's a simplified

version where it is more clear what's going on…

implicit def appTuple2[X: Monoid] = new Applicative[Tuple2[X,?]] { def pure[A](a: A): (X, A) = (Monoid[X].empty, a) def ap[A, B](ff: (X, A => B))(fa: (X, A)): (X, B) = { (ff._1.combine(fa._1), ff._2(fa._2)) } }

You can see how the fact that Monoids have an empty (or zero) value is useful

here because when we implement pure we need a value of type X to build the

response but we only have an A. By using the Monoid.empty function we can

fulfil the contract.

The implementation of ap is also interesting. What happens is that the X values

(which have a Monoid instance), are simply combined. The new value B is created

by applying the function in ff to the value in fa and now we have our new

Tuple2.

Recall the function signature for traverse on a List…

def traverse[G[_]: Applicative, A, B](fa: List[A])(f: A => G[B]): G[List[B]]

The G in traverse is used to apply the function f to each element of our

collection, and so when we traverse and use Tuple2 as our G, it will use that

Monoid implementation we see above. Take a list and reducing it to a single

value is called various names including folding, reducing, crushing. In Scala

we'd typically fold a list of elements with a Monadic structure. We can now do

the same thing with traverse.

Traverse[List].traverse(List[Int](1,2,3))((n: Int) => Tuple2(n,n)) // res: (Int, List[Int]) = (6, List(1, 2, 3))

You can see that the resulting tuple consists of two things, the sum of the integers in the list (6) and second element is the list of results. That gives us the ability to transform a collection into a an aggregated (folded) value, and map it to a new collection at the same time!

Now often you may want to just fold the values and you don't care about the

other collection. In that case you could just drop it by calling ._2 on the

result.

There's a better way, and we can now move onto finding out about Phantom types and how they can help us here.

Phantom types

Now we can rejoin the paper. We are introduced the Accy type which is called

Const in Cats.

newtype Accy o a = Acc{acc :: o }

In Scala the Const type can be implemented like this…

final case class MyConst[A,B](unConst: A)

If it helps, this is a type level version of a function that takes two parameters; an A and a B, and just drops the B returning the A…

def const[A, B](a: A)(b: => B): A = a

Const, or Accy, is a strange-looking data type that takes two type parameters,

and in fact takes two values, but we only store the first. This is why the

second parameter is called a phantom. We can create a Const with any crazy

type we want for the B parameter because it won't be used at all…

import com.oracle.webservices.internal.api.message.MessageContext Const[Int,MessageContext](12).getConst // res: Int = 12

So what use is Const? For one, we can create an applicative functor for it just like we did with Tuple, but now we can drop the pretense that we cared about the second value and just get the folded value, saving us CPU time and memory as the computation progresses…

Traverse[List].traverse(List(1,2,3,4,5))(a => Const[Int, String](a)).getConst // res: Int = 15

Let's convert the examples in the paper of using this technique into Scala…

accumulate :: (Traversable t, Monoid o) ⇒ (a → o) → t a → o accumulate f = acc · traverse (Acc · f ) reduce :: (Traversable t, Monoid o) ⇒ t o → o reduce = accumulate id

def accumulate[A,F[_]: Traverse, B: Monoid](f: A => B)(fa: F[A]): B = { Traverse[F].traverse(fa)((a: A) => Const.of[B](f(a))).getConst } def reduce[F[_]: Traverse, A: Monoid](fa: F[A]): A = { Traverse[F].traverse(fa)((a: A) => Const.of[A](a)).getConst } // Accumulate println("accumulate: " + accumulate((s: String) => s.size)(List("ten", "twenty", "thirty"))) // 15 // Reduce println("reduce: " + reduce(List("ten", "twenty", "thirty"))) // tentwentythirty

Note that we could implement reduce with accumulate as follows…

def reduceWithAccumulate[F[_]: Traverse, A: Monoid](fa: F[A]): A = { accumulate[A, F, A](identity)(fa) }

We can also convert the following without much difficulty…

flatten :: Tree a → [a ] flatten = accumulate (:[ ]) concat :: [[a ]] → [a ] concat = reduce

Scala versions…

def treeFlatten[A](tree: Tree[A]): List[A] = { accumulate((a: A) => List(a))(tree) } def concatLists[A](fa: List[List[A]]): List[A] = { reduce(fa) } // Tree flattening println("treeFlatten: " + treeFlatten(tree1)) // treeFlatten: List(10, 5, 10) // Concat lists (flatten) println("concatLists: " + concatLists(List(List(1,2,3), List(4,5,6)))) // concatLists: List(1, 2, 3, 4, 5, 6)

The last thing in this section is an example of how to find if a an element in a list (or really anything we can Traverse) matches some predicate…

newtype Mighty = Might{might :: Bool}

instance Monoid Mighty where

∅ = Might False

Might x ⊕ Might y = Might (x ∨ y)

any :: Traversable t ⇒ (a → Bool) → t a → Bool

any p = might · accumulate (Might · p)

What's going on here is that we get a new type called Mighty which has a Monoid instance for it representing disjunction (boolean or). There is no default Monoid for boolean in Cats so we have to define one first.

implicit val mightyBoolean = new Monoid[Boolean] { def empty = false def combine(a: Boolean, b: Boolean) = a || b } Traverse[List].traverse(List(1,2,3,4,5))(a => if(a > 2) Const.of[Any](true) else Const.of[Any](false)) // res: Const[Boolean, List[Any]] = Const(true) Traverse[List].traverse(List(1,2,3,4,5))(a => if(a > 5) Const.of[Any](true) else Const.of[Any](false)) // res: Const[Boolean, List[Any]] = Const(false)

Instead of using boolean we can rely on the Integer addition boolean to count how many times a predicate is matched in a traversable structure…

Traverse[List].traverse(List(1,2,3,4,5))(a => if(a > 2) Const.of[Any](1) else Const.of[Any](0)) // res: Const[Int, List[Any]] = Const(3)

Comparing Monad with Applicative

We know that all Monads are Applicatives. Why? Because all Monads implement

pure and they can also implement ap as follows…

def ap[A, B](ff: List[A => B])(fa: List[A]) = { ff.flatMap(f => fa.map(f)) }

But all Applicatives are not Monads. For example you cannot implement flatMap for Const…

def flatMap[A,B](fa: MyConst[X,A])(f: A => MyConst[X,B]): MyConst[X,B] = { val x = fa.unConst val a = ??? // f(a) ??? }

We need an A to apply the function f, but there's no way to get one. Therefore Const is no Monad.

So now we know: there are strictly more Applicative functors than Monads. Should we just throw the Monad class away and use Applicative instead? Of course not! The reason there are fewer monads is just that the Monad structure is more powerful.

Next we contrast how Monad and Applicative differ in terms of a function called miffy (for doing a monadic if) and iffy (an applicative if)…

def miffy[A, F[_]: Monad](mb: F[Boolean], fa: F[A], fb: F[A]): F[A] = { mb.flatMap{ b => if(b) fa else fb } } def iffy[A, F[_]: Applicative](mb: F[Boolean], fa: F[A], fb: F[A]): F[A] = { Applicative[F].map3(mb, fa, fb){ case (cond, a, b) => if(cond) a else b } } // miffy(Option(true), Option(1), None) // res: Option[Int] = Some(1) // iffy(Option(true), Option(1), None) // res: Option[Int] = None

Here you can see that whilst miffy will succeed if the input is true even though the else effect failed (it was None). But with the applicative version we have to evaluate all the effects first, and if one of them fails they all fail.

The moral is this: if you’ve got an Applicative functor, that’s good; if you’ve also got a Monad, that’s even better! And the dual of the moral is this: if you want a Monad, that’s good; if you only want an Applicative functor, that’s even better!

Composing Applicatives

Not all Monads compose but all Applicatives do; the Applicative class is closed under composition.

instance (Applicative f ,Applicative g) ⇒ Applicative (f ◦ g) where pure x = Comp J (pure x ) K Comp fs ~ Comp xs = Comp J (~) fs xs K

What does it mean to compose an Applicative? It means that we get the effects of both. For example we can use the full suite of Applicative functionality on a List of Options…

val x: List[Option[Int]] = List(10.some, 9.some, 8.some) val y: List[Option[Int]] = List(7.some, 6.some, 5.some) Applicative[List].compose[Option].map2(x, y)(_ + _) // List[Option[Int]] = List(Some(17), Some(16), Some(15), // Some(16), Some(15), Some(14), // Some(15), Some(14), Some(13))

Accumulating Exceptions

In this section, it's noted that we could accumulate errors from computations using a type such as…

data Except err a = OK a | Failed err

You may recognize this as Scala's Either, which stores with an error or a

success in its Left and Right sides.

This could be used to collect errors by using the list monoid (as in unpublished work by Duncan Coutts), or to summarise them in some way.

This is in fact exactly what we did when looking at the Validated type above.

Applicative functors and Arrows

In this section, the paper discusses arrows which have some similarities with

the Applicative interface, but it's out of scope for purposes of this blog

post. I may come back to it in a future post.

Applicative functors, categorically

We now see a different, but equivalent way to define the Applicative class. Take a look at this Haskell code…

class Functor f ⇒ Monoidal f where unit :: f () (*) :: f a → f b → f (a, b)

This means given a type f with a Functor instance, we can define the class

Monoidal. unit is the same as pure, whilst the * function takes two effects

and returns a new effect with the result tupled. This is implemented in Cats for

Applicative's and known as product.

Applicative[Option].product(Option(22),Option(20)) // res: Option[(Int, Int)] = Some((22, 20))

Let's show that we can implement Applicative if we have the product function (assuming product is not implemented in terms of ap)…

override def ap[F[_], A, B](ff: F[A => B])(fa: F[A]) = { product(ff,fa).map { case (f, a) => f(a) } }

Applicative can be implemented with ap and pure, or pure and product. We'll see another choice later. Next in this section is some category theory which I'll also skip for now, leaving only this quote for your interest…

Fans of category theory will recognise the above laws as the properties of a lax monoidal functor for the monoidal structure given by products.

We applied ourselves!

That's the end of McBride and Patterson's paper; here are some conclusions they made…

- Applicative Functors have been identified

- They lie between Functor and Monad in power

- Unlike Monads, Applicatives are closed under composition

- Traverable Functors thread Applicative Applications and form a useful toolkit

The paper ends with a great quote that is both positive about borrowing ideas from category theory…

The explosion of categorical structure in functional programming: monads, comonads, arrows and now applicative functors should not, we suggest, be a cause for alarm. Why should we not profit from whatever structure we can sniff out, abstract and re-use? The challenge is to avoid a chaotic proliferation of peculiar and incompatible notations.

Plug for idiom brackets was snipped.

Back to Ap

So far in this post, we've seen lots of code that uses ap in various ways. We'll

wrap it up with some implementation notes on the useful function map2, and how

we can arrive at needing the ap function to do so. Then we'll look at a

practical example of using Applicative in image processing.

Let's start with a problem. We have two functions that return IO's as output. We want to call a pure function that takes two values as input. In short we need map2…

case class User(email: String, name: String, blocked: Boolean) case class Account(email: String, balance: Long) def getUser(email: String) = IO.sleep(10 seconds) *> IO(User("bob@gmail.com", "Bob Jones", false)) def getAccount(email: String) = IO.sleep(10 seconds) *> IO(Account("bob@gmail.com", 100)) def goodStanding(user: User, account: Account): Boolean = { user.blocked == false && account.balance >= 0 } val email = "bob@gmail.com" val checkBob = Applicative[IO.Par].map2( Par(getUser(email)), Par(getAccount(email)))(goodStanding) println("run bank check: " + Par.unwrap(checkBob).unsafeRunSync) // run bank check: true

So this motivating example works, it runs the (simulated) slow network calls in parallel and passes them to our function. (Note that this example is using Cats Effect and in order to select Applicative rather than Monadic operation we need to wrap the IO in the Par wrapper and then unwrap it at the end).

Given that map2 is a useful function how would we implement it? Just like we saw at the start of the paper, we can use flatMap and map to implement it quite easily…

def map2[A,B,C,F[_]: Monad](fa: F[A], fb: F[B])(f: (A,B) => C): F[C] = fa.flatMap { a => fb.map(b => f(a,b)) }

That works fine, but sadly it requires that we complete fa before starting and we want to allow independent effects. So we can't use Monad's flatMap. Let's build the function without it…

def applicativeMap2[A,B,C,F[_]: Applicative](fa: F[A], fb: F[B])(f: (A,B) => C) : F[C] = { val ffb = fa.map { a => (b: B) => f(a,b) } Applicative[F].ap(ffb)(fb) }

Obviously, there's no need to implement map2 because the Applicative instances

already have it, but it helps understand further the motivation for ap. The

value ffb is actually the result of currying the function f. What we get

back is of the form F[B => C]. We can then call that function using

Applicative's ap giving the correct response F[C].

By successively currying we can use the same trick to write map3, map4 and so on.

Map2… does it blend?

So far we've seen some interesting uses of Applicative; accumulating errors

from network calls, managing multiple concurrent effects, transposing matrices,

evaluating expressions. In 2019 I wrote a blog post about Comonads that used the

coflatMap operation to do image processing. One of the takeaways from that post

is that one of the great advantages of functional programming is composability.

One thing I'd like to be able to do is to do operations on two images then blend

them together. In my original code images are stored represented as a

FocusedGrid which is just an array of pixels and a focus point, which works

nicely for the coflatMap. The blend operation just needs a function to average

out the pixels and a map2…

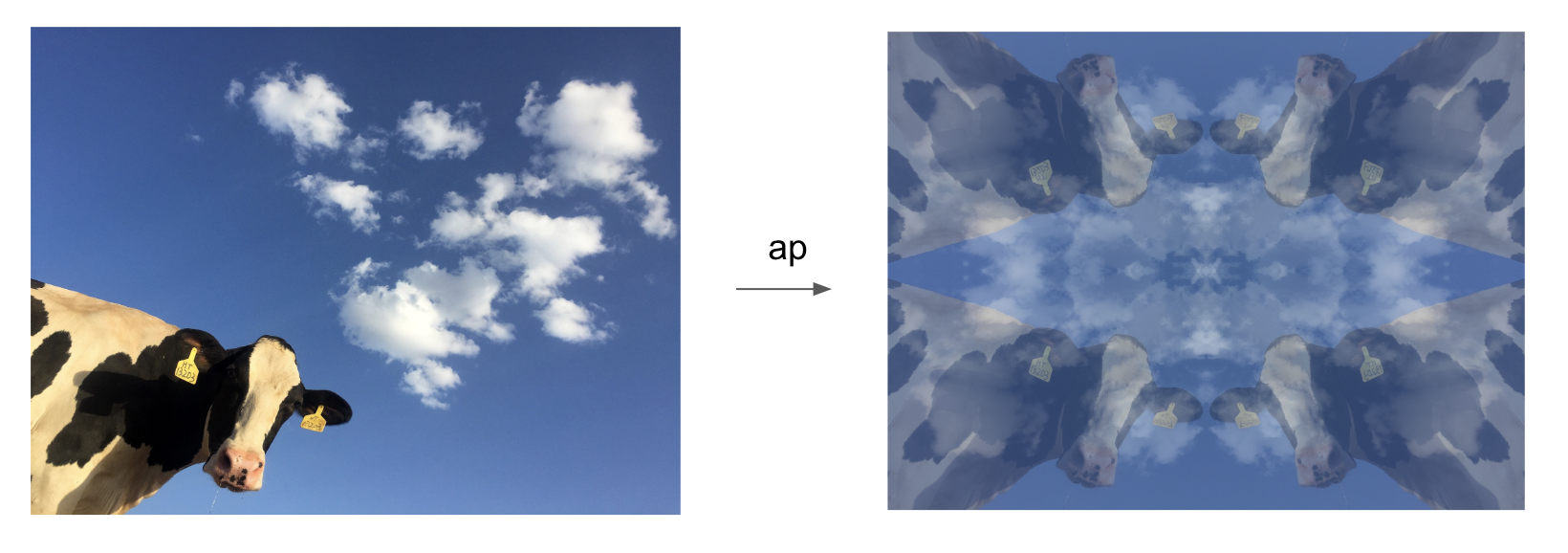

def blend(a: (Int, Int, Int), b: (Int, Int, Int)): (Int, Int, Int) = ((a._1 + b._1) / 2, (a._2 + b._2) / 2, (a._3 + b._3) / 2) val leftImage = originalImage2 val rightImage = originalImage2.coflatMap(mirrorHorizontal) val upImage = originalImage2.coflatMap(mirrorVertical) val downImage = rightImage.coflatMap(mirrorVertical) val leftAndRight = Applicative[FocusedGrid].map2(leftImage, rightImage)(blend _) val upAndDown = Applicative[FocusedGrid].map2(upImage, downImage)( val finalImage = leftAndRight.map2(upAndDown)(blend _))

These few lines are all that is needed in user code to create the cows image at the top of this post. If you want to know what the coflatMap is all about please checkout my earlier post Comonads for Life or the related talk at Scale by the Bay 2020 A gentle introduction to comonads.

But in the background we also need to implement an Applicative instance for the FocusedGrid datatype. Let's start with pure…

def pure[A](a: A): FocusedGrid[A] = FocusedGrid((0,0), Vector(Vector(a)))

Just like with the pure function for a List Applicative (or Monad), all we do is lift a pure value into a FocusedGrid with a singe row and column.

def ap[A, B](ff: FocusedGrid[A => B])(fa: FocusedGrid[A]): FocusedGrid[B] = { val newGrid = ff.grid.mapWithIndex { (row, i) => row.zip(fa.grid(i)).map { case (f, a) => f(a) } } FocusedGrid(ff.focus, newGrid) }

Remember that with List we implemented an Applicative instance that took all the functions in the input list and applied them in turn to each element in the list of parameters. Ap for FocusedGrid iterates over a grid of functions and applies them to the target row and column in the input parameter.

Our work is done; with pure and ap implemented we now have a fully working Applicative instance and that means map2 will work.

Post-mature optimisation

Most of the time, solving day to day business problems, we don't have to worry to much about performance, but when it comes to things like image processing when we are dealing with large numbers of pixels it can be more important to know what is going on under the hood, and when to step in and improve it. Profiling should be your guide, but if you look at the default implementation of map2 in Cats you can see that it is doing a lot of work that is not needed …

map(product(fa, fb))(f.tupled)

And product is …

ap(map(fa)(a => (b: B) => (a, b)))(fb)

So with a square image of size 2000 pixels (4 million total), to perform a map2 we are going to map of the image twice; once to create an image of curried functions and a second time to apply them. In addition, we're going to create a lot temporary data that we don't need.

Fortunately there's nothing to stop you from instead of using the default implementation of map2 we can implement our own…

override def map2[A, B, Z](fa: FocusedGrid[A], fb: FocusedGrid[B])(f: (A, B) => Z): FocusedGrid[Z] = { val faRowIter = fa.grid.iterator val fbRowIter = fb.grid.iterator val rowBuilder = Vector.newBuilder[Vector[Z]] while(faRowIter.hasNext && fbRowIter.hasNext) { val faColIter = faRowIter.next.iterator val fbColIter = fbRowIter.next.iterator val colBuilder = Vector.newBuilder[Z] while(faColIter.hasNext && fbColIter.hasNext) { colBuilder.addOne(f(faColIter.next, fbColIter.next)) } rowBuilder.addOne(colBuilder.result) } FocusedGrid(fa.focus, rowBuilder.result) }

Here we make a much more efficient implementation that instead of creating temporary data structures will use an iterator on each input grid and a Vector builder to efficiently build the output. This is maybe not the fastest implementation but it's certainly doing a lot less work. Of course optimizing without profiling is a waste of time. I had heard that Array is much faster than Vector for this kind of use case, so the sample code also includes an Array implementation. The code is somewhat ugly due to some technical constraints around Array, and turned out not to be any faster!

In any case, should you want to explore this further, the instructions are all

in the accompanying code to do you run your own benchmarks using jmh.

Here's the results from my own benchmarking…

| Benchmark | Mode | Cnt | Score | Error | Units | |

|---|---|---|---|---|---|---|

| FocusedGridArrayBench.withMap2Large | avgt | 5 | 171099.587 | ± | 65471.687 | us/op |

| FocusedGridArrayBench.withMap2Small | avgt | 5 | 54.418 | ± | 25.669 | us/op |

| FocusedGridBench.withMap2Large | avgt | 5 | 152831.655 | ± | 40709.521 | us/op |

| FocusedGridBench.withMap2Small | avgt | 5 | 59.168 | ± | 15.346 | us/op |

| FocusedGridBench.withSlowApLarge | avgt | 5 | 649442.291 | ± | 215248.298 | us/op |

| FocusedGridBench.withSlowApSmall | avgt | 5 | 141.025 | ± | 78.928 | us/op |

What is notable that optimized map2 is 4x faster on the large image than slowAp and the array version is actually slower than the vector version. Just goes to show, if performance matters then always benchmark your original code and your solution to make sure your assumptions are correct. There's also a balance between opimised code keeping your code easy to read and reason about.

Conclusion

You made it to the end! Congratulations, and I hope it made sense. Please feel free to contact me if you have any notes, corrections, improvements or suggestions!

This post was going to be a five-minute thing but blew up into a monster and there are still things that I didn't get to. Some things I may visit in the future:

- Composition - examples of composing Functors and Applicatives

- Arrows

- Laws of Applicatives (although they are covered nicely elsewhere)

- Category theory (esp. of lax monoidal functors in more depth)

References

Videos

Written word

- Applicative Programming with Effects McBride, Patterson 2008

- The Essence of the Iterator Pattern Gibbons, Bruno

- Do We Need Dependent Types? Fridlender

- Read a Paper: Applicative Programming with Effects

- Cats Applicative documentation

- Cats Const documentation

- Functional Programming in Scala aka The Red Book

- Functional Programmers for Mortals aka The Blue Book

Code

- applicatives (Scala conversion from the paper and lots of Applicative stuff)

- comonad (Image processing example with new Applicative demo)

- Scala Benchmarks (How to benchmark Scala by Colin Woodbury)

- Idiom Ears (Get those fancy ears in your applicative programming)

- Scala Workflow (A full on system for Monadic and Applicative programming)

Acknowledgements

- Thank you to the welcoming members of the Scala community who generously share their knowledge and libraries

- Thank you to Hermann Hueck for his suggestions and improvements on this post

© 2020 Justin Heyes-Jones. All Rights Reserved.